So, when I’m not sporadically posting articles here on Hypergrid Business, I’m at work covering enterprise artificial intelligence. This is how companies, non-profits, and government organizations are actually using AI.

I’m not into the public relations BS that vendors are pushing, nor the get-rich-quick schemes from charlatans or equally questionable investment advice from stock analysts — I’ll write more about how to really invest in AI later — and not the doom-and-gloom disaster predictions which just serve to scare and paralyze. Also, I’m not a big fan of the singularity theory.

I’m not saying that none of those things will happen. We can still have a Skynet that kills all humans, we should probably pass some AI safety laws, and I’m happy that there are people worrying about it.

But we can’t just focus on the worst-case scenarios, especially when there’s not much we can do about them, when there are much more likely things that can happen — things that we can, in fact, plan for and do something practical about.

So, anyway, that’s where I’m coming from.

And, today, looking back on 2025, I wanted to share what I see as the ten biggest AI developments based on their actual impact on corporations, business, and individual creators.

As someone who’s covered enterprise technology for over 20 years, I talk regularly with business executives about what they’re actually using, what’s working, and what isn’t, so this list isn’t just based on headlines or what ChatGPT tells you. It’s based on my actual experience with the technology and on my conversations with the people who use it. I just checked my database, and in 2025 alone, I connected with 545 sources, and conducted 228 in-depth interviews over Zoom or another video platform — or, sometimes, the telephone. The rest were email conversations.

Not all interviews made it into the final article. Sometimes, people would confirm what other sources had said, and I’d only quote one of them. And sometimes, the interview would veer off into an interesting area that I didn’t have room for in the article, but might eventually do a follow-up story on.

You can see all my recent AI-related stories here.

I’m starting with the story that has the biggest business impact and ending with the one that affects individual creators the most.

Open source AI dominates

For me, open source was the breakout star of 2025. DeepSeek released an open-source model this year that matched OpenAI’s performance at a fraction of the cost, temporarily dropping Nvidia’s market value by around half a trillion dollars. Other Chinese models followed, and, most recently, we saw Mistral, which is based in Europe, make waves with state-of-the-art models of their own.

You can see a video I recently posted about open source AI here:

The key advantage of open source is that the basic software is free and comes in multiple sizes — from models that rival ChatGPT down to smaller versions that run on personal computers or phones. You can customize them because the source code is open.

For corporations, this is often the only way to go if you need AI that stays completely within your company walls. If you’re not comfortable with OpenAI, Anthropic, Meta, Google, or Microsoft having access to your sensitive data, hosting your own open-source AI means your information never leaves your infrastructure.

Open source doesn’t advertise, but it’s been quietly eating the enterprise world for years.

According to the 2025 State of Open Source Report by Perforce, 96% of organizations increased or maintained their use of open-source software in the past year, with over a quarter reporting a significant increase.

Linux powers 96 percent of the top one million web servers and runs 100 percent of the world’s 500 fastest supercomputers — a position it’s held consistently since November 2017. All Android smartphones run a version of Linux. Chromebooks run a version of Linux.

In the enterprise, open source accounts for 55 percent of operating systems, 49 percent of cloud & container technologies, 46 percent of Web and application development, 45 percent of database and data management and 40 percent of AI and machine learning, according to the Linux Foundation’s 2025 state of open source software report.

It’s a story that gets almost no attention because open source projects don’t advertise and rarely make people into billionaires. But it’s transforming the world.

What makes this fascinating to me is that big tech giants — like Microsoft and Amazon — are having to embrace open source because their customers are pushing them toward it.

Since open-source models come in different sizes, anyone with a high-end gaming computer can run one at home. I’m currently recommending Mistral 3, which is European and has strong privacy protections built in, though if you ask me next month I’ll probably have a new favorite because this industry changes so quickly.

Agentic AI changes everything

Regular AI chatbots answer questions. You ask, they think, they respond. But agentic AI represents a step-function improvement in capabilities.

Agentic AI is a structure built on top of large language models and other tools. You have agents powered by AI or traditional software working together. Some agents are orchestrators that create plans. Others delegate tasks to the best agent for each job. Review agents check the work and send it back if it’s not good enough, adapting the plan as new information comes in.
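To make that structure a bit more concrete, here is a minimal sketch in Python of the plan, delegate, and review loop described above. Everything in it is an assumption made for illustration: the function names (`orchestrator`, `worker`, `reviewer`, `run`) are hypothetical, and each "agent" is a plain stub where a real system would call a language model or another tool.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    result: str = ""
    attempts: int = 0


def orchestrator(goal: str) -> list[Task]:
    """Stand-in planner: split a goal into steps (a real one would call an LLM)."""
    return [Task(f"{step} for: {goal}") for step in ("research", "draft", "summarize")]


def worker(task: Task) -> str:
    """Stand-in worker agent: its first attempt is deliberately unfinished."""
    task.attempts += 1
    if task.attempts == 1:
        return "todo"
    return f"completed '{task.description}' on attempt {task.attempts}"


def reviewer(output: str) -> bool:
    """Stand-in review agent: accept only output that looks finished."""
    return output.startswith("completed")


def run(goal: str, max_attempts: int = 3) -> list[Task]:
    """Plan the work, delegate each task, and send rejected work back."""
    tasks = orchestrator(goal)
    for task in tasks:
        while task.attempts < max_attempts:
            output = worker(task)
            if reviewer(output):
                task.result = output
                break
    return tasks


if __name__ == "__main__":
    for finished in run("quarterly sales report"):
        print(finished.result)
```

The point of the sketch is the shape of the loop, not the stubs: the reviewer can reject work and force another attempt, and the `max_attempts` cap keeps a stubborn task from looping forever.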

This capability is increasingly being built into platforms like Claude and ChatGPT. On the backend, there’s a whole conversation of different models, each with different areas of expertise — a mixture of experts combined with orchestration layers, different-sized models, and tools like web browsers, calculators, or financial lookup functions.

We saw the Model Context Protocol (MCP) get widespread adoption this year, which gives agents a standard way to connect to data sources and tools. We even have payment standards now so agents can make payments, which is both powerful and slightly terrifying.

What’s unusual is how fast these standards are evolving. Anthropic released MCP just over a year ago, open-sourced it, and everyone immediately adopted it instead of creating competing proprietary standards. We don’t have the AOL walled garden situation. The basic infrastructure is standards-based, which makes it much easier for companies to deploy agents without feeling trapped or locked in.

Agentic AI isn’t something that makes a clear and obvious difference to individual users, at least right now. And many probably don’t even notice that they’re using agentic AI because it’s now getting built into the chatbots.

But Zapier’s October 2025 survey of over 500 enterprise leaders found that 72 percent of enterprises are now using or testing AI agents, with 40 percent having multiple agents in production. Even more striking, 84 percent say it’s likely or certain they’ll increase AI agent investments over the next 12 months. I’m quoting them because theirs is the most recent survey, even though they have a vested interest in the outcome, since they now make AI agents. But I’ve seen similar results come out through the year from McKinsey, PwC, MIT Sloan Management Review and Boston Consulting Group, and from Google’s survey of over 3,000 senior business leaders that came out in September.

The numbers change slightly depending on who exactly the survey talked to and what specific questions they asked, but the general tendency is that enterprise use of agentic AI seems to be growing faster than any technological shift we’ve seen before.

Agentic AI is big because of how transformative it is. It isn’t just about answering employee questions or writing email drafts for them. Agentic AI takes over entire business processes with multiple decision points, disparate data sources, and lots of steps.

On a bit of a tangent, in my opinion, Google is now best positioned to take advantage of both agentic AI and generative AI in general. They invented the transformer architecture behind generative AI with their 2017 “Attention Is All You Need” paper, but didn’t do much with it initially. Instead, OpenAI took the ball and ran with it.

For a while, Google lagged badly. Gemini wasn’t good at first — their big conference presentation had an embarrassing mistake right on the front page. Their image generator wasn’t good, their chatbot wasn’t good, their coding assistant wasn’t great.

Then Google released Gemini 3 this past November. For a while — and in some benchmarks still — Gemini was the top model. They revamped AI Studio so you can now actually vibe code in it. Their Nano Banana image generator blew everyone away and it’s free. They also have Genie, a world model with an understanding of the physical world.

But beyond having world-class models, Google has something else in their favor: they make money. They make a lot of money. They have virtually unlimited funds to invest.

And they have an existing customer base bigger than pretty much anyone else. Everyone uses the Google search engine. Everyone uses Chrome. Everyone uses Gmail and YouTube and Google Docs and Google Street View and Google Translate. More than 70 percent of smartphones are Androids.

When you Google something — and everyone still starts with Google — their AI answers your question. When you ask your phone a question, Gemini answers. Why spend $20 on ChatGPT when Google’s right there?

They have cash flow, world-class models, developer tools, the Google Cloud Platform, all the infrastructure, and their own AI chip (the TPU) that competes with Nvidia. They have the hardware, data centers, cloud infrastructure…

A year ago, Google didn’t look golden. At the end of 2025, they kind of do.

Vibe coding opens software development to everyone

Andrej Karpathy coined the term “vibe coding” and it stuck. It’s become such a phenomenon that Collins Dictionary made it their word of the year.

AI developers building AI systems often use AI coding assistance to help them, so AI is already accelerating its own development. But vibe coding also opens code development to everyone.

A couple weeks ago, I vibe coded an app and put it online. It briefly made the first page of Google results. The app works perfectly and I still use it — a color palette generator with all the features I wanted in one place.

I couldn’t find an app that did everything I wanted, so I had Google make one. It took a few minutes and created the perfect app. Everything worked and it included features I hadn’t even thought to ask for. I spent about an hour tweaking it — moving things around, changing colors, adding my logo — but the basic app worked out of the box.

It wrote this app in a language I don’t even know. When I wanted to deploy it, I told it I have a DreamHost account and it walked me through creating a folder and uploading one file. That’s it. I had a working app.

Here’s a video I posted about it:

Now anytime I want to do something and don’t like the existing apps or they’re too expensive, I know I can vibe code a replacement. I don’t particularly want to because I’m a journalist, not a developer. But for someone just starting out who does want to do this for a living, it’s a fantastic tool.

A lot of people hate on it because it’s not perfect.

And it’s not perfect. But you test it — you can have it create test cases — then fix the problems, test again, and iterate. Making a working application is still a job. Marketing it, promoting it, providing support — that’s all still work. AI can help with it, but the work needs to be done. I just don’t want to do it!
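For anyone who hasn’t seen what "test cases" look like, here is a small sketch in Python. It is not the code from my app. The function `invert_color` and the checks in `run_checks` are hypothetical examples, made up to show the test, fix, and test-again loop on one tiny feature a color tool might have.

```python
def invert_color(hex_color: str) -> str:
    """Return the inverse of a '#rrggbb' color by flipping each channel."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected '#rrggbb', got {hex_color!r}")
    # Split into red, green, and blue, each a number from 0 to 255.
    channels = [int(value[i:i + 2], 16) for i in (0, 2, 4)]
    return "#" + "".join(f"{255 - c:02x}" for c in channels)


def run_checks() -> int:
    """Run the test cases and return how many passed."""
    cases = {
        "#000000": "#ffffff",  # black inverts to white
        "#ffffff": "#000000",  # white inverts to black
        "#ff8000": "#007fff",  # orange inverts to a blue
    }
    passed = 0
    for given, expected in cases.items():
        assert invert_color(given) == expected, given
        passed += 1
    return passed


if __name__ == "__main__":
    print(f"{run_checks()} checks passed")
```

If a check fails, you paste the error back into the AI, let it fix the code, and run the checks again.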

But for someone who does — the barriers to entry have disappeared. It’s essentially free — free options are available everywhere.

AI for coding is typically the top use case companies mention when I talk to them. That’s where they’re already seeing big positive results.

Some companies are already starting to use vibe coding to create replacements for software they used to pay a lot of money for. I would hate to be a company right now that does nothing else but make overpriced commodity software.

Reasoning models that actually think

Several companies released thinking or reasoning models this year — o1 from OpenAI, Gemini 3 from Google — that can solve complex multi-step logic problems, win mathematics competitions, and tackle difficult problems in physics and science.

Anthropic has been releasing papers about how models do thinking internally, and it’s fascinating. They’re mapping neural networks and finding that areas light up when the model thinks about a particular concept.

The same areas light up regardless of which language you asked the question in, meaning the concept is separate from language.

This isn’t obvious if you think of AI as “autocomplete on steroids,” but it makes sense when you remember these are neural networks with multiple layers. The first layer is relationships between words. The next layer is relationships between those relationships. It’s about the patterns behind the patterns behind the patterns.

You can see the thinking process happen. If you give a model more time to think, it gives you a better answer.

It’s thinking through things, working forwards and backwards, saying “that doesn’t work, let’s change our approach.” Researchers can watch this happen in real time, like an MRI scan of the brain but for an AI model.

They can even make particular areas light up, and the AI becomes aware of random thoughts popping up — like solving a math problem when suddenly a picture of an elephant appears in its “head,” and it either incorporates that or dismisses it as irrelevant.

Very interesting stuff that makes you think about what thinking actually is.

For enterprises — and people — this means that the AIs can be used to tackle complex challenges that previously required expert humans.

Yes, this sucks if you’re one of those expert humans, but it’s great if you’re trying to get something done and there just aren’t enough experts out there. Like, say, if you’re trying to get nuclear fusion working or cure cancer or solve the climate crisis.

And I’m actually not all that sorry for the human experts. Read further and I’ll explain why.

The infrastructure investment boom

We saw the $500 billion Stargate project announced this year. Nvidia briefly topped $5 trillion in market value, the first company to do so.

Companies are making huge investments in power generation, nuclear fusion, new energy sources, and even space-based data centers. In fact, I recently wrote an article about space-based data centers. It should be posting in the next few days.

Hyperscalers like Amazon and Microsoft are building. AI and software companies are building. Regular companies are building their own datacenters, or trying to rent them, because they want to run their own AIs.

People are getting upset about this, but personally I think a lot of the outrage is overblown. Yes, there are climate impacts, but you can offset all your AI use by eating one less burger or making fewer trips to the grocery store or consolidating your Amazon deliveries to once a week. Our existing industries have a much bigger impact on the environment, but we aren’t as worried about it because it’s old news, and data centers are new news. Our brains are weird that way.

Personally, I’d be very happy to trade a cancer-causing, water-wasting industry like tobacco for a cancer-curing AI.

And yes, even those stupid video AIs are helping — making videos helps the AIs gain an understanding of how the world works, how cause-and-effect works.

Small models change everything

Yes, models are getting bigger all the time — billion-dollar models, huge thinking models. But we’re also getting really small models that run on your computer or phone.

This means people have AI with them everywhere they go. They already do — you have an AI system on your phone now. But this is going to change people’s lives once we start depending on them.

People are already getting too caught up with AI companions, going down delusional spirals. We’re going to see more of it. Every new technology has unexpected side effects.

But it might change society for good too. How will the world change when everyone has an AI assistant in their hand or ear? Think how the world changed when everyone had GPS, Google Translate, or instant access to any information they needed at their fingertips.

Social media had a huge impact. Google had a huge impact. What will the impact be of having an AI assistant everywhere you go?

We don’t know yet. It’s going to change society, business, how people interact.

If you want to start planning ahead for your career — or for your business — this is a good thing to think about.

I know I’m going to be thinking about it a lot this coming year…

The workforce paradox

The headlines scream about layoffs everywhere, nobody finding jobs, AI taking all the work, everyone unemployed.

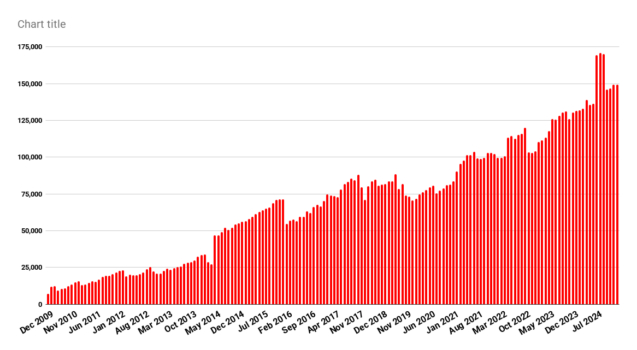

According to Challenger, Gray & Christmas, there were over a million job cuts in 2025, and 54,694 were directly attributed to AI. According to MIT, 11.7 percent of jobs can already be replaced by AI. According to the IMF, 60 percent of jobs in developed countries are exposed to AI.

We’re told we’ll need universal basic income because AI will take all the jobs.

But the actual numbers show the opposite.

Unemployment is still low by historical standards, below 5 percent, so we’re still at functionally full employment. Jobs are still being created. When a company lays off a thousand people, that’s news. When a thousand companies hire one person each, that’s not news.

This is true even for jobs in fields that are most affected by AI. According to a December Vanguard report, the number of jobs in the 100 occupations with the highest AI exposure grew by 1.7 percent this year, compared to 0.8 percent for all other occupations, and salaries increased by 3.8 percent after inflation, compared to 0.7 percent for everyone else.

The World Economic Forum predicts a net employment increase of 78 million jobs by the end of this decade.

Here’s a video I did about this:

Totally counterintuitive, but it makes sense. In every technological revolution with major productivity increases, we had major changes in job types but not a net loss of jobs. Here’s a research paper about how this happens.

Ninety-five percent of us used to be farmers. Now only 5% are. Industrialization destroyed 90% of jobs. But we didn’t end up with 90% of people sitting around doing nothing. We all have jobs doing weird stuff nobody predicted.

Professional sports — paying adults to play children’s games — is a whole industry now. Who’d have thunk it?

We create new needs and wants constantly. And some of those needs and wants can be handled by AI, sure — but for others, we want humans. We care about what people think, we care about original art, live concerts, human interactions. We’re social animals.

We could eat a microwave meal, but we pay extra to go to a fancy restaurant, wait for a table, have a limited selection, small portions, expensive food that doesn’t even taste that good — all because we enjoy the experience. Having another human feed us goes to a core biological imperative.

Any job involving core human needs — creativity, self-expression, communication, making things with our own hands — we want to keep doing. Gardening is a huge industry. We don’t have to grow our own food, but we enjoy it and pay money to do it. And we pay for gardening classes, and support local nurseries, and hire landscape designers. All of these kinds of jobs will only increase.

Plus, as our total capacity as a civilization increases, we’ll have more excess capacity to invest in cleaning up the environment, curing cancer, going to space, whatever we want. There will be new jobs in all these areas. Very cool new jobs.

I think AI is going to be a net positive for humanity — if it doesn’t destroy us all, of course, and if we can handle the political and social disruptions.

That’s the middle case — not the singularity, not doom and gloom. In my opinion, this is the most likely scenario and one that we can actually do something with. (Though we should also make some phone calls about regulating AI and improving the social safety net so that we can handle the disruptions.)

Generative user interfaces

Generative user interfaces are really cool and we haven’t seen much of this yet, but I’ve seen demos. Instead of using software someone built for you, AI vibe codes it on the fly.

So this isn’t a technology that’s made a big impact on business in 2025, but it’s a technology that was invented in 2025 and will have a huge, huge impact going forward. It should be a much bigger story than it was, but I think it got lost among all the other huge things that happened with AI this year.

Here’s how it works.

I want to write an article. My AI quickly creates a word processor for me. Then I want to run a spell check, and oh, no, my word processor doesn’t have this function! No fear, my AI notices that I’m trying to do this and quickly writes a spell-checking program for me and spell-checks my story — instead of having the function built in ahead of time. Over time, my entire workspace becomes customized around me, exactly for what I need, when I need it, how I use it.
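Here is a rough sketch of that idea in Python, under heavy assumptions. The `Workspace` class and the `generate_tool` function are hypothetical names I made up for illustration, and `generate_tool` is a canned stand-in for the part where an AI would actually write and test new code. What the sketch does show is the pattern: a tool doesn’t exist until the first time you reach for it.

```python
from typing import Callable

Tool = Callable[[str], str]


def generate_tool(name: str) -> Tool:
    """Stand-in for a code-writing model: return a freshly 'written' tool.

    Hypothetical: a real system would ask an AI to write and test the code.
    """
    if name == "word_count":
        return lambda text: str(len(text.split()))
    if name == "spell_check":
        known_fixes = {"teh": "the", "recieve": "receive"}
        return lambda text: " ".join(known_fixes.get(w, w) for w in text.split())
    raise NotImplementedError(f"no generator for {name!r} in this sketch")


class Workspace:
    """A workspace that builds tools on first use instead of shipping them."""

    def __init__(self) -> None:
        self.tools: dict[str, Tool] = {}
        self.generated: list[str] = []  # record of what was built on demand

    def use(self, name: str, text: str) -> str:
        if name not in self.tools:
            # The feature is missing, so create it now and keep it around.
            self.tools[name] = generate_tool(name)
            self.generated.append(name)
        return self.tools[name](text)


if __name__ == "__main__":
    desk = Workspace()
    print(desk.use("spell_check", "I recieve teh mail"))
    print(desk.generated)
```

After a few weeks of use, the `tools` dictionary in a workspace like this would hold only the features one particular person actually needed, which is the customization the paragraph above describes.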

For companies, instead of having to adjust their business processes around the enterprise software they use, the software will adjust itself automatically around their processes. And not just for the company as a whole, but for individual departments, even individual employees — and even all the different tasks that employees are doing.

This on-the-fly, context-aware, real-time software development is a totally new software paradigm that will completely upend the software ecosystem. Companies with strong software components should seriously start planning for when this hits.

It won’t be instant. People’s behaviors change slowly. I’ve been using FileMaker database software for 30 years because I like it and habits die hard. We still have print newspapers and magazines. Things change slowly.

But in areas where people are building new things or something doesn’t work, that’s where change happens. If you’re a company that wants to grow, you need to look at this as a serious potential impact.

To find out more, check out Google’s A2UI, an open project for agent-driven interfaces. Or GenOS. Or C1 by Thesys.

Content training is fair use

This one affects me most as a content creator. I’ve been writing for tech publications for 20 years in a very standard style — neutral, authoritative, lots of em dashes and three-part structures. AI has been trained on my writing. I’m probably part of why ChatGPT likes long em dashes and three-part structures.

The question is whether AI companies have the right to train on our content without permission. Recently, Anthropic agreed to pay $1.5 billion to authors for using their work — but this wasn’t a massive win for authors. Yes, they got a little bit of money. But it was a massive win for AI companies.

The authors are getting paid because Anthropic downloaded pirated books in an obviously illegal way. Then they stopped doing that, bought used books, scanned them, and used those for training.

According to two big court cases decided in June, one involving Anthropic and the other Meta, it’s not the training that’s the problem. It’s the illegal downloading.

As long as data is collected legally, it’s okay to train on it. Since then, I’ve seen an explosion in AI crawlers on the web.

I run a couple of online magazines — Hypergrid Business and MetaStellar — and had to update all the anti-bot rules, DDoS protections, upgrade Cloudflare, upgrade server capacity — because we were flooded with bot crawlers. Anything online is fair game now. And we’re still seeing performance issues and are having to keep tweaking things and file support tickets.

And it’s not just those two court cases, though those are the biggest ones. Most cases have been going in that direction. Copyright holders need to be compensated in some jurisdictions, but for the most part, all we’re seeing are huge wins for AI companies.

It’s like Google News grabbing headlines and the first paragraphs of stories — it’s considered fair use because it’s transformative. AI is transformative because it doesn’t save and distribute the actual content, just the patterns behind the patterns that it learned.

If you ask for an exact copy of the latest Stephen King book and AI gives it to you, that’s copyright infringement. But AI companies don’t do that. They save the ideas in the book, not the book itself. And you can’t copyright ideas.

It’s like reading a book with normal human memory and retelling the story in your own words based on your understanding. The courts have ruled this is transformative and totally fine.

If you’re a content creator hoping to defend yourself against AI, there’s really nothing you can do if your work is already out there. Unless you bury yourself in a hole and stop distributing anything, if an AI company gets a copy, they can use it for training.

This has to be handled politically. We need new laws, world treaties like we have for copyright. The big companies will comply because fines can be significant, like with GDPR.

If this matters to you, get involved with groups lobbying for those changes.

If this is a top concern for you, start by using fairly trained AIs. Adobe’s Firefly is only trained on licensed data, and they pay artists. Check out Fairly Trained for more AIs that treat content creators fairly.

Personally, though, I’ve decided not to worry about it. I have no impact on court cases. My only impact on legal protections is voting, and I’ll do so when I get the opportunity.

But I’m not going to take my stuff down from the Internet just to spite some AI companies. First, they won’t notice and won’t care. Second, they’ve already got everything. Third — and this is actually most important to me — I want people to be able to find my stuff when they ask AIs about it.

I believe AI is going to be like Google Search. Yes, it’s annoying when Google scrapes your website and doesn’t pay you and just tells everyone what the story is and what it’s about. But without Google Search, nobody would even know your website exists.

Same with AI. I want AI to tell people about my writing and about my ideas. To do that, it has to know who I am and what I’ve written.

And I’m not going to hold my breath for any payment. If we creators get anything, it’s going to be minuscule and more symbolic than anything else.

I’d rather spend my time and energy figuring out a way forward. What will be my value in the coming years? What do I, as a human, offer that AI can’t?

Do you agree with any of this? Disagree? Leave a comment! Or — better yet! — watch my YouTube video and leave a comment there!